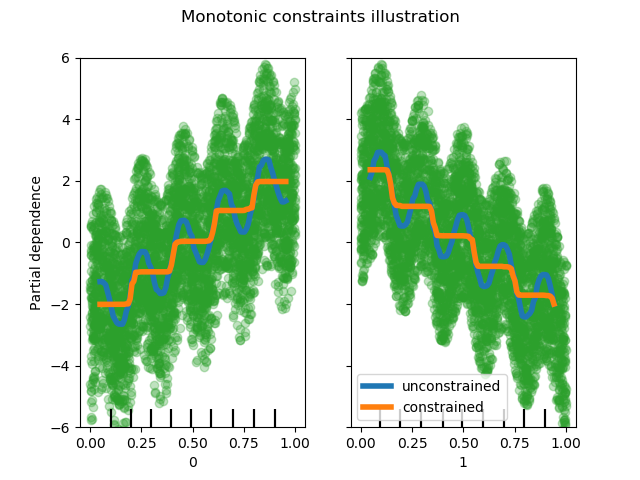

Monotonic Constraints

This example illustrates the effect of monotonic constraints on a gradient boosting estimator.

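We build an artificial dataset where the target value is, overall, positively correlated with the first feature (with some random and non-random variations) and, overall, negatively correlated with the second feature.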
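The formula for the target can be read directly from the script that follows. Differentiating it shows why the sine and cosine terms count as "non-random variations". Their slopes (up to 10π ≈ 31.4) exceed the linear slope of 5, so the target is locally non-monotonic in each feature even though the net trend over [0, 1] is +5 for the first feature and −5 for the second.

$$
y = 5 f_0 + \sin(10\pi f_0) - 5 f_1 - \cos(10\pi f_1) + \varepsilon,
\qquad f_0, f_1 \sim \mathcal{U}(0, 1),\quad \varepsilon \sim \mathcal{N}(0, 0.01^2)
$$

$$
\frac{\partial y}{\partial f_0} = 5 + 10\pi\cos(10\pi f_0) < 0 \iff \cos(10\pi f_0) < -\tfrac{1}{2\pi},
\qquad
\frac{\partial y}{\partial f_1} = -5 + 10\pi\sin(10\pi f_1) > 0 \iff \sin(10\pi f_1) > \tfrac{1}{2\pi}
$$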

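By imposing a positive (increasing) or negative (decreasing) constraint on each feature during learning, the estimator follows the overall trend instead of fitting the local variations.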

This example was inspired by the XGBoost documentation.

# Requires scikit-learn >= 1.0: HistGradientBoostingRegressor no longer needs the
# experimental import, and PartialDependenceDisplay.from_estimator replaces the
# removed plot_partial_dependence function.
from sklearn.ensemble import HistGradientBoostingRegressor
from sklearn.inspection import PartialDependenceDisplay
import numpy as np
import matplotlib.pyplot as plt

rng = np.random.RandomState(0)

n_samples = 5000
f_0 = rng.rand(n_samples)  # positive correlation with y
f_1 = rng.rand(n_samples)  # negative correlation with y
X = np.c_[f_0, f_1]
noise = rng.normal(loc=0.0, scale=0.01, size=n_samples)
# Linear trend in each feature, plus a high-frequency oscillation and small noise
y = (5 * f_0 + np.sin(10 * np.pi * f_0) -
     5 * f_1 - np.cos(10 * np.pi * f_1) +
     noise)

fig, ax = plt.subplots()

# Without any constraint
gbdt = HistGradientBoostingRegressor()
gbdt.fit(X, y)
disp = PartialDependenceDisplay.from_estimator(
    gbdt, X, features=[0, 1],
    line_kw={'linewidth': 4, 'label': 'unconstrained'},
    ax=ax)

# With a positive constraint on the first feature and a negative one on the second
gbdt = HistGradientBoostingRegressor(monotonic_cst=[1, -1])
gbdt.fit(X, y)
PartialDependenceDisplay.from_estimator(
    gbdt, X, features=[0, 1],
    feature_names=('First feature\nPositive constraint',
                   'Second feature\nNegative constraint'),
    line_kw={'linewidth': 4, 'label': 'constrained'},
    ax=disp.axes_)

# Overlay the raw training data behind each partial dependence curve
for f_idx in (0, 1):
    disp.axes_[0, f_idx].plot(X[:, f_idx], y, 'o', alpha=.3, zorder=-1)
    disp.axes_[0, f_idx].set_ylim(-6, 6)

plt.legend()
fig.suptitle("Monotonic constraints illustration")
plt.show()

Total running time of the script: (0 minutes 0.876 seconds)