保险索赔的推断回归¶

此示例演示了Poisson、Gamma和Tweedie回归在法国汽车第三方责任索赔数据集上的使用,并受R教程[1]的启发。

在这个数据集中,每个样本对应于保险单,即保险公司和个人(投保人)内的合同。可用的功能包括驾驶年龄,车辆年龄,车辆动力等。

几个定义:索赔是指投保人向保险公司提出的赔偿保险损失的请求。索赔额是保险公司必须支付的金额。风险敞口是以年数为单位的某一特定保单的保险承保期限。

在这里,我们的目标是预测期望值,也就是每一敞口单位索赔总额的平均值,也称为纯保费。

有几种可能做到这一点,其中两种是:

用泊松分布对索赔数量进行建模,将每项索赔的平均索赔额(也称为严重程度)建模为Gamma分布,并将两者的预测相乘,以得到索赔总额。 直接模拟每次曝光的总索赔额,通常使用Twedie Power的Twedie分布。

在这个例子中,我们将说明这两种方法。我们首先定义一些用于加载数据和可视化结果的辅助函数。

1 A. Noll, R. Salzmann and M.V. Wuthrich, Case Study: French Motor Third-Party Liability Claims (November 8, 2018). doi:10.2139/ssrn.3164764

print(__doc__)

# Authors: Christian Lorentzen <lorentzen.ch@gmail.com>

# Roman Yurchak <rth.yurchak@gmail.com>

# Olivier Grisel <olivier.grisel@ensta.org>

# License: BSD 3 clause

from functools import partial

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

from sklearn.datasets import fetch_openml

from sklearn.compose import ColumnTransformer

from sklearn.linear_model import PoissonRegressor, GammaRegressor

from sklearn.linear_model import TweedieRegressor

from sklearn.metrics import mean_tweedie_deviance

from sklearn.model_selection import train_test_split

from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import FunctionTransformer, OneHotEncoder

from sklearn.preprocessing import StandardScaler, KBinsDiscretizer

from sklearn.metrics import mean_absolute_error, mean_squared_error, auc

def load_mtpl2(n_samples=100000):

"""Fetch the French Motor Third-Party Liability Claims dataset.

Parameters

----------

n_samples: int, default=100000

number of samples to select (for faster run time). Full dataset has

678013 samples.

"""

# freMTPL2freq dataset from https://www.openml.org/d/41214

df_freq = fetch_openml(data_id=41214, as_frame=True)['data']

df_freq['IDpol'] = df_freq['IDpol'].astype(np.int)

df_freq.set_index('IDpol', inplace=True)

# freMTPL2sev dataset from https://www.openml.org/d/41215

df_sev = fetch_openml(data_id=41215, as_frame=True)['data']

# sum ClaimAmount over identical IDs

df_sev = df_sev.groupby('IDpol').sum()

df = df_freq.join(df_sev, how="left")

df["ClaimAmount"].fillna(0, inplace=True)

# unquote string fields

for column_name in df.columns[df.dtypes.values == np.object]:

df[column_name] = df[column_name].str.strip("'")

return df.iloc[:n_samples]

def plot_obs_pred(df, feature, weight, observed, predicted, y_label=None,

title=None, ax=None, fill_legend=False):

"""Plot observed and predicted - aggregated per feature level.

Parameters

----------

df : DataFrame

input data

feature: str

a column name of df for the feature to be plotted

weight : str

column name of df with the values of weights or exposure

observed : str

a column name of df with the observed target

predicted : DataFrame

a dataframe, with the same index as df, with the predicted target

fill_legend : bool, default=False

whether to show fill_between legend

"""

# aggregate observed and predicted variables by feature level

df_ = df.loc[:, [feature, weight]].copy()

df_["observed"] = df[observed] * df[weight]

df_["predicted"] = predicted * df[weight]

df_ = (

df_.groupby([feature])[[weight, "observed", "predicted"]]

.sum()

.assign(observed=lambda x: x["observed"] / x[weight])

.assign(predicted=lambda x: x["predicted"] / x[weight])

)

ax = df_.loc[:, ["observed", "predicted"]].plot(style=".", ax=ax)

y_max = df_.loc[:, ["observed", "predicted"]].values.max() * 0.8

p2 = ax.fill_between(

df_.index,

0,

y_max * df_[weight] / df_[weight].values.max(),

color="g",

alpha=0.1,

)

if fill_legend:

ax.legend([p2], ["{} distribution".format(feature)])

ax.set(

ylabel=y_label if y_label is not None else None,

title=title if title is not None else "Train: Observed vs Predicted",

)

def score_estimator(

estimator, X_train, X_test, df_train, df_test, target, weights,

tweedie_powers=None,

):

"""Evaluate an estimator on train and test sets with different metrics"""

metrics = [

("D² explained", None), # Use default scorer if it exists

("mean abs. error", mean_absolute_error),

("mean squared error", mean_squared_error),

]

if tweedie_powers:

metrics += [(

"mean Tweedie dev p={:.4f}".format(power),

partial(mean_tweedie_deviance, power=power)

) for power in tweedie_powers]

res = []

for subset_label, X, df in [

("train", X_train, df_train),

("test", X_test, df_test),

]:

y, _weights = df[target], df[weights]

for score_label, metric in metrics:

if isinstance(estimator, tuple) and len(estimator) == 2:

# Score the model consisting of the product of frequency and

# severity models.

est_freq, est_sev = estimator

y_pred = est_freq.predict(X) * est_sev.predict(X)

else:

y_pred = estimator.predict(X)

if metric is None:

if not hasattr(estimator, "score"):

continue

score = estimator.score(X, y, sample_weight=_weights)

else:

score = metric(y, y_pred, sample_weight=_weights)

res.append(

{"subset": subset_label, "metric": score_label, "score": score}

)

res = (

pd.DataFrame(res)

.set_index(["metric", "subset"])

.score.unstack(-1)

.round(4)

.loc[:, ['train', 'test']]

)

return res

加载数据集、基本特征提取和目标定义

我们通过将包含索赔数量(ClaimNb)的freMTPL2freq表与包含相同策略ID(IDpol)的索赔额(ClaimAMount)的freMTPL2sev表连接起来,构建freMTPL2数据集。

df = load_mtpl2(n_samples=60000)

# Note: filter out claims with zero amount, as the severity model

# requires strictly positive target values.

df.loc[(df["ClaimAmount"] == 0) & (df["ClaimNb"] >= 1), "ClaimNb"] = 0

# Correct for unreasonable observations (that might be data error)

# and a few exceptionally large claim amounts

df["ClaimNb"] = df["ClaimNb"].clip(upper=4)

df["Exposure"] = df["Exposure"].clip(upper=1)

df["ClaimAmount"] = df["ClaimAmount"].clip(upper=200000)

log_scale_transformer = make_pipeline(

FunctionTransformer(func=np.log),

StandardScaler()

)

column_trans = ColumnTransformer(

[

("binned_numeric", KBinsDiscretizer(n_bins=10),

["VehAge", "DrivAge"]),

("onehot_categorical", OneHotEncoder(),

["VehBrand", "VehPower", "VehGas", "Region", "Area"]),

("passthrough_numeric", "passthrough",

["BonusMalus"]),

("log_scaled_numeric", log_scale_transformer,

["Density"]),

],

remainder="drop",

)

X = column_trans.fit_transform(df)

# Insurances companies are interested in modeling the Pure Premium, that is

# the expected total claim amount per unit of exposure for each policyholder

# in their portfolio:

df["PurePremium"] = df["ClaimAmount"] / df["Exposure"]

# This can be indirectly approximated by a 2-step modeling: the product of the

# Frequency times the average claim amount per claim:

df["Frequency"] = df["ClaimNb"] / df["Exposure"]

df["AvgClaimAmount"] = df["ClaimAmount"] / np.fmax(df["ClaimNb"], 1)

with pd.option_context("display.max_columns", 15):

print(df[df.ClaimAmount > 0].head())

/home/circleci/project/sklearn/datasets/_openml.py:754: UserWarning: Version 1 of dataset freMTPL2freq is inactive, meaning that issues have been found in the dataset. Try using a newer version from this URL: https://www.openml.org/data/v1/download/20649148/freMTPL2freq.arff

warn("Version {} of dataset {} is inactive, meaning that issues have "

/home/circleci/project/sklearn/datasets/_openml.py:754: UserWarning: Version 1 of dataset freMTPL2sev is inactive, meaning that issues have been found in the dataset. Try using a newer version from this URL: https://www.openml.org/data/v1/download/20649149/freMTPL2sev.arff

warn("Version {} of dataset {} is inactive, meaning that issues have "

/home/circleci/project/sklearn/preprocessing/_discretization.py:200: UserWarning: Bins whose width are too small (i.e., <= 1e-8) in feature 0 are removed. Consider decreasing the number of bins.

warnings.warn('Bins whose width are too small (i.e., <= '

ClaimNb Exposure Area VehPower VehAge DrivAge BonusMalus VehBrand \

IDpol

139 1.0 0.75 F 7.0 1.0 61.0 50.0 B12

190 1.0 0.14 B 12.0 5.0 50.0 60.0 B12

414 1.0 0.14 E 4.0 0.0 36.0 85.0 B12

424 2.0 0.62 F 10.0 0.0 51.0 100.0 B12

463 1.0 0.31 A 5.0 0.0 45.0 50.0 B12

VehGas Density Region ClaimAmount PurePremium Frequency \

IDpol

139 Regular 27000.0 R11 303.00 404.000000 1.333333

190 Diesel 56.0 R25 1981.84 14156.000000 7.142857

414 Regular 4792.0 R11 1456.55 10403.928571 7.142857

424 Regular 27000.0 R11 10834.00 17474.193548 3.225806

463 Regular 12.0 R73 3986.67 12860.225806 3.225806

AvgClaimAmount

IDpol

139 303.00

190 1981.84

414 1456.55

424 5417.00

463 3986.67

频率模型-泊松分布

索赔数量(ClaimNb)是一个正整数(包括0)。因此,这个目标可以用泊松分布来建模。然后假定为在给定时间间隔内以恒定速率发生的离散事件的数目(Exposure,以年为单位)。在这里,我们建立了频率 y = ClaimNb / Exposure的模型,该模型仍然是一个(尺度)泊松分布,并以 Exposure a作为sample_weight.。

df_train, df_test, X_train, X_test = train_test_split(df, X, random_state=0)

# The parameters of the model are estimated by minimizing the Poisson deviance

# on the training set via a quasi-Newton solver: l-BFGS. Some of the features

# are collinear, we use a weak penalization to avoid numerical issues.

glm_freq = PoissonRegressor(alpha=1e-3, max_iter=400)

glm_freq.fit(X_train, df_train["Frequency"],

sample_weight=df_train["Exposure"])

scores = score_estimator(

glm_freq,

X_train,

X_test,

df_train,

df_test,

target="Frequency",

weights="Exposure",

)

print("Evaluation of PoissonRegressor on target Frequency")

print(scores)

Evaluation of PoissonRegressor on target Frequency

subset train test

metric

D² explained 0.0590 0.0579

mean abs. error 0.1706 0.1661

mean squared error 0.3041 0.3043

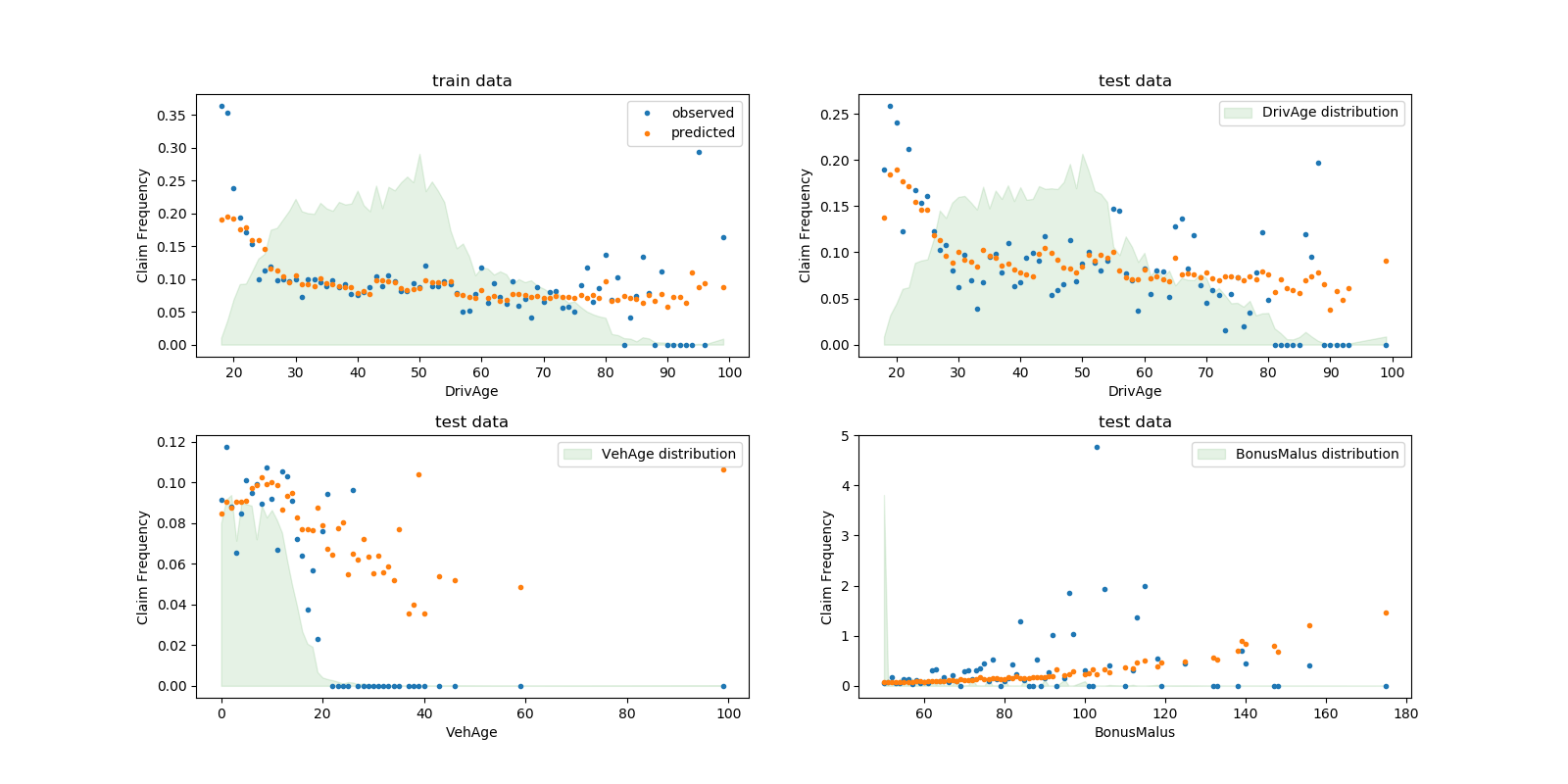

我们可以以驾驶员年龄(DrivAge)、车辆年龄(VehAge)和保险奖金/malus(BonusMalus)来比较观察值和预测值。

fig, ax = plt.subplots(ncols=2, nrows=2, figsize=(16, 8))

fig.subplots_adjust(hspace=0.3, wspace=0.2)

plot_obs_pred(

df=df_train,

feature="DrivAge",

weight="Exposure",

observed="Frequency",

predicted=glm_freq.predict(X_train),

y_label="Claim Frequency",

title="train data",

ax=ax[0, 0],

)

plot_obs_pred(

df=df_test,

feature="DrivAge",

weight="Exposure",

observed="Frequency",

predicted=glm_freq.predict(X_test),

y_label="Claim Frequency",

title="test data",

ax=ax[0, 1],

fill_legend=True

)

plot_obs_pred(

df=df_test,

feature="VehAge",

weight="Exposure",

observed="Frequency",

predicted=glm_freq.predict(X_test),

y_label="Claim Frequency",

title="test data",

ax=ax[1, 0],

fill_legend=True

)

plot_obs_pred(

df=df_test,

feature="BonusMalus",

weight="Exposure",

observed="Frequency",

predicted=glm_freq.predict(X_test),

y_label="Claim Frequency",

title="test data",

ax=ax[1, 1],

fill_legend=True

)

根据观测资料,30岁以下驾驶员发生事故的频率较高,且与

根据观测资料,30岁以下驾驶员发生事故的频率较高,且与BonusMalus变量呈正相关。我们的模型能够在很大程度上正确地模拟这种行为。

严重程度模型-伽玛分布

平均索赔金额或严重程度(AvgClaimAMount)可以在大致显示为遵循Gamma分布。我们用与频率模型相同的特征来拟合严重程度的GLM模型。

注意:

Gamm分布支持,而不是时,我们过滤掉 ClaimAmount == 0。我们使用 ClaimNb作为sample_weight来说明包含多个索赔的保单。

mask_train = df_train["ClaimAmount"] > 0

mask_test = df_test["ClaimAmount"] > 0

glm_sev = GammaRegressor(alpha=10., max_iter=10000)

glm_sev.fit(

X_train[mask_train.values],

df_train.loc[mask_train, "AvgClaimAmount"],

sample_weight=df_train.loc[mask_train, "ClaimNb"],

)

scores = score_estimator(

glm_sev,

X_train[mask_train.values],

X_test[mask_test.values],

df_train[mask_train],

df_test[mask_test],

target="AvgClaimAmount",

weights="ClaimNb",

)

print("Evaluation of GammaRegressor on target AvgClaimAmount")

print(scores)

Evaluation of GammaRegressor on target AvgClaimAmount

subset train test

metric

D² explained 4.300000e-03 -1.380000e-02

mean abs. error 1.699197e+03 2.027923e+03

mean squared error 4.548147e+07 6.094863e+07

在这里,测试数据的分数需要谨慎,因为它们比训练数据要差得多,这表明尽管有很强的正则化,但这些数据任然过拟合。

请注意,得出的模型是每项索赔的平均索赔额。因此,它以至少有一项索赔为条件,一般不能用来预测每一保单的平均索赔额。

print("Mean AvgClaim Amount per policy: %.2f "

% df_train["AvgClaimAmount"].mean())

print("Mean AvgClaim Amount | NbClaim > 0: %.2f"

% df_train["AvgClaimAmount"][df_train["AvgClaimAmount"] > 0].mean())

print("Predicted Mean AvgClaim Amount | NbClaim > 0: %.2f"

% glm_sev.predict(X_train).mean())

Mean AvgClaim Amount per policy: 97.89

Mean AvgClaim Amount | NbClaim > 0: 1899.60

Predicted Mean AvgClaim Amount | NbClaim > 0: 1884.40

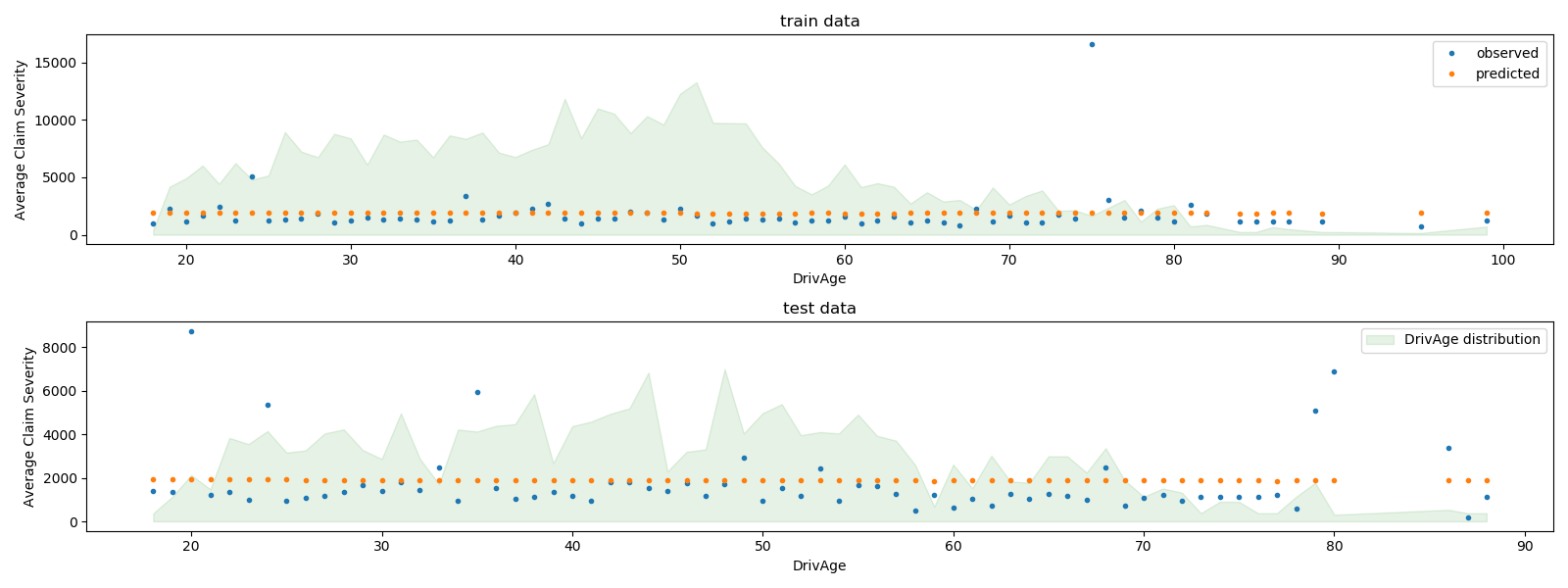

我们可以直观地比较观察值和预测值,并根据驾驶员的年龄进行汇总(DrivAge)。

fig, ax = plt.subplots(ncols=1, nrows=2, figsize=(16, 6))

plot_obs_pred(

df=df_train.loc[mask_train],

feature="DrivAge",

weight="Exposure",

observed="AvgClaimAmount",

predicted=glm_sev.predict(X_train[mask_train.values]),

y_label="Average Claim Severity",

title="train data",

ax=ax[0],

)

plot_obs_pred(

df=df_test.loc[mask_test],

feature="DrivAge",

weight="Exposure",

observed="AvgClaimAmount",

predicted=glm_sev.predict(X_test[mask_test.values]),

y_label="Average Claim Severity",

title="test data",

ax=ax[1],

fill_legend=True

)

plt.tight_layout()

总的来说,司机年龄(

总的来说,司机年龄(DrivAge)对索赔严重程度的影响很小,无论是在观察数据还是预测数据中。

基于产品模型 vs 单Tweedie回归的纯保险费建模

如导言所述,每单位曝露的总索赔额可以通过对严重程度模型的预测,模拟为频率模型预测的乘积。

或者,可以直接用唯一的复合Poisson Gamma广义线性模型(带有日志链接函数)来模拟总损失。该模型是具有“幂”参数的Twedie GLM的特例。在此,我们将Tweedie模型的power参数预先固定为有效范围内的任意值(1.9)。理想情况下,人们可以通过网格搜索来选择这个值,方法是最小化Tweedie模型的对数似然,但是不幸的是,当前的实现不允许这样做(还)。

我们将比较两种方法的性能。为了量化这两种模型的性能,可以计算训练和测试数据的平均偏差,假设索赔总额的复合Poisson-Gamma分布。这与power参数介于1和2之间的Tweedie分布等价。

sklearn.metrics.mean_tweedie_deviance 依赖power参数。在这里,我们计算了一个可能值的网格的平均偏差,并对模型进行了并行比较。我们用相同的power值来比较它们。理想情况下,我们希望无论power如何,一种模式将始终优于另一种模式。

glm_pure_premium = TweedieRegressor(power=1.9, alpha=.1, max_iter=10000)

glm_pure_premium.fit(X_train, df_train["PurePremium"],

sample_weight=df_train["Exposure"])

tweedie_powers = [1.5, 1.7, 1.8, 1.9, 1.99, 1.999, 1.9999]

scores_product_model = score_estimator(

(glm_freq, glm_sev),

X_train,

X_test,

df_train,

df_test,

target="PurePremium",

weights="Exposure",

tweedie_powers=tweedie_powers,

)

scores_glm_pure_premium = score_estimator(

glm_pure_premium,

X_train,

X_test,

df_train,

df_test,

target="PurePremium",

weights="Exposure",

tweedie_powers=tweedie_powers

)

scores = pd.concat([scores_product_model, scores_glm_pure_premium],

axis=1, sort=True,

keys=('Product Model', 'TweedieRegressor'))

print("Evaluation of the Product Model and the Tweedie Regressor "

"on target PurePremium")

with pd.option_context('display.expand_frame_repr', False):

print(scores)

Evaluation of the Product Model and the Tweedie Regressor on target PurePremium

Product Model TweedieRegressor

subset train test train test

D² explained NaN NaN 2.550000e-02 2.480000e-02

mean Tweedie dev p=1.5000 8.217990e+01 8.640200e+01 7.960820e+01 8.618700e+01

mean Tweedie dev p=1.7000 3.833750e+01 3.920430e+01 3.737390e+01 3.917460e+01

mean Tweedie dev p=1.8000 3.106910e+01 3.148750e+01 3.047900e+01 3.148130e+01

mean Tweedie dev p=1.9000 3.396250e+01 3.420610e+01 3.360070e+01 3.420830e+01

mean Tweedie dev p=1.9900 1.989243e+02 1.996420e+02 1.986911e+02 1.996461e+02

mean Tweedie dev p=1.9990 1.886429e+03 1.892749e+03 1.886206e+03 1.892753e+03

mean Tweedie dev p=1.9999 1.876452e+04 1.882692e+04 1.876430e+04 1.882692e+04

mean abs. error 3.247212e+02 3.470205e+02 3.202556e+02 3.397084e+02

mean squared error 1.469184e+08 3.325902e+07 1.469328e+08 3.325471e+07

在本例中,两种建模方法都产生了可比较的性能指标。由于实现原因,产品模型无法获得解释差异的百分比。

此外,我们还可以通过比较、观察和预测测试和训练子集的总索赔量来验证这些模型。我们发现,平均而言,这两种模型都倾向于低估总体索赔(但这种行为取决于正则化的数量)。

res = []

for subset_label, X, df in [

("train", X_train, df_train),

("test", X_test, df_test),

]:

exposure = df["Exposure"].values

res.append(

{

"subset": subset_label,

"observed": df["ClaimAmount"].values.sum(),

"predicted, frequency*severity model": np.sum(

exposure * glm_freq.predict(X) * glm_sev.predict(X)

),

"predicted, tweedie, power=%.2f"

% glm_pure_premium.power: np.sum(

exposure * glm_pure_premium.predict(X)),

}

)

print(pd.DataFrame(res).set_index("subset").T)

subset train test

observed 4.577616e+06 1.725665e+06

predicted, frequency*severity model 4.566477e+06 1.495871e+06

predicted, tweedie, power=1.90 4.451918e+06 1.432048e+06

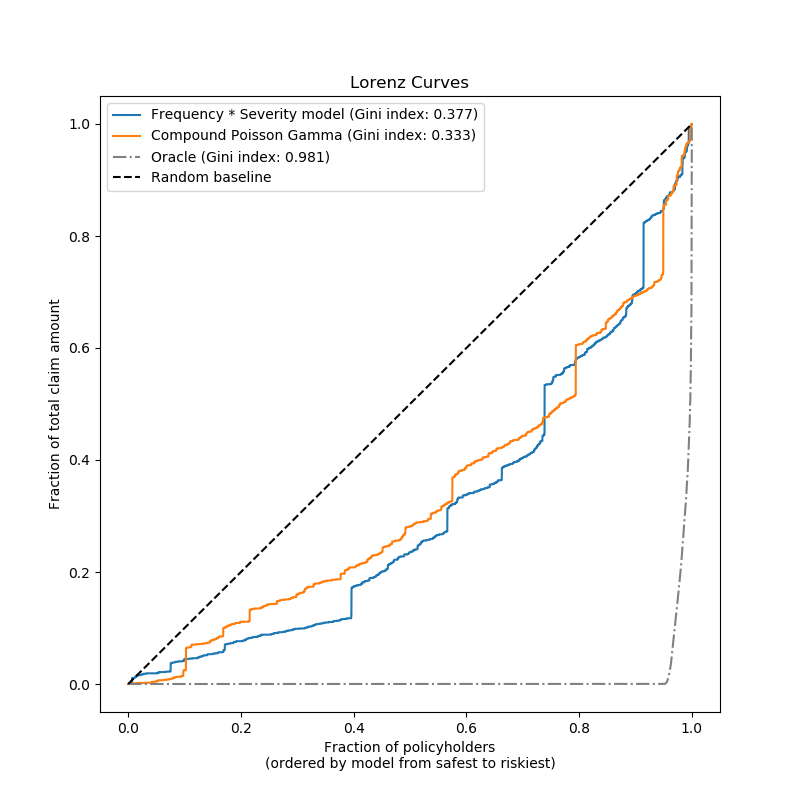

最后,我们可以用累索赔图对这两种模型进行比较:对于每个模型,投保人从最安全到最危险的等级,观察到的累计索赔的比例绘制在y轴上。这个图通常被称为模型的有序Lorenz曲线。

基尼系数(基于曲线下面积)可以作为模型选择指标,量化模型对投保人进行评级的能力。请注意,此指标并不反映模型根据索赔总额的绝对值作出准确预测的能力,而仅以相对金额作为排序指标。

这两种模型都能够按风险程度对投保人进行排序,但由于少量特征预测问题的自然难度较少,因此两者都远未完善。

请注意,GINI指数只表征模型的排名性能,而不是它的校准:预测的任何单调变换都会使模型的基尼指数保持不变。

最后,应该强调的是,直接适用于纯保险费的复合Poisson Gamma模型在操作上更易于开发和维护,因为它包含在单个scikit-learn估计器中,而不是一对模型,每个模型都有自己的一组超参数。

def lorenz_curve(y_true, y_pred, exposure):

y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)

exposure = np.asarray(exposure)

# order samples by increasing predicted risk:

ranking = np.argsort(y_pred)

ranked_exposure = exposure[ranking]

ranked_pure_premium = y_true[ranking]

cumulated_claim_amount = np.cumsum(ranked_pure_premium * ranked_exposure)

cumulated_claim_amount /= cumulated_claim_amount[-1]

cumulated_samples = np.linspace(0, 1, len(cumulated_claim_amount))

return cumulated_samples, cumulated_claim_amount

fig, ax = plt.subplots(figsize=(8, 8))

y_pred_product = glm_freq.predict(X_test) * glm_sev.predict(X_test)

y_pred_total = glm_pure_premium.predict(X_test)

for label, y_pred in [("Frequency * Severity model", y_pred_product),

("Compound Poisson Gamma", y_pred_total)]:

ordered_samples, cum_claims = lorenz_curve(

df_test["PurePremium"], y_pred, df_test["Exposure"])

gini = 1 - 2 * auc(ordered_samples, cum_claims)

label += " (Gini index: {:.3f})".format(gini)

ax.plot(ordered_samples, cum_claims, linestyle="-", label=label)

# Oracle model: y_pred == y_test

ordered_samples, cum_claims = lorenz_curve(

df_test["PurePremium"], df_test["PurePremium"], df_test["Exposure"])

gini = 1 - 2 * auc(ordered_samples, cum_claims)

label = "Oracle (Gini index: {:.3f})".format(gini)

ax.plot(ordered_samples, cum_claims, linestyle="-.", color="gray",

label=label)

# Random baseline

ax.plot([0, 1], [0, 1], linestyle="--", color="black",

label="Random baseline")

ax.set(

title="Lorenz Curves",

xlabel=('Fraction of policyholders\n'

'(ordered by model from safest to riskiest)'),

ylabel='Fraction of total claim amount'

)

ax.legend(loc="upper left")

plt.plot()

[]

脚本的总运行时间:(0分35.831秒)

Download Python source code:plot_tweedie_regression_insurance_claims.py

Download Jupyter notebook:plot_tweedie_regression_insurance_claims.ipynb